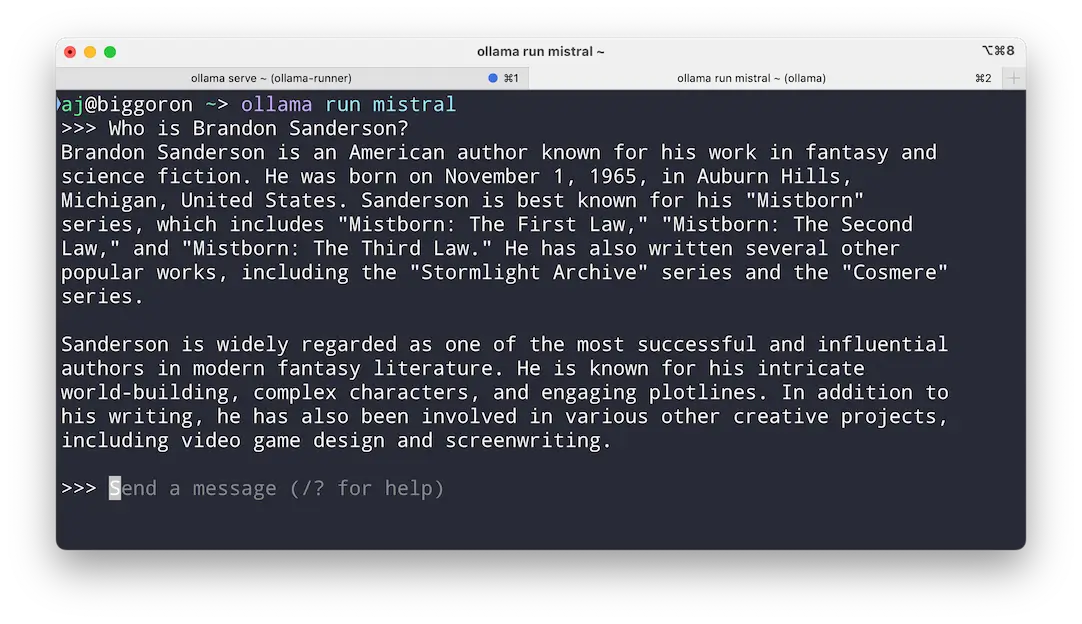

A free, open-source tool to run powerful language models like Llama and Mistral locally on your Mac with ease.

Key Features

- Run LLMs locally

- Supports popular models (Llama, Mistral, Gemma, and more)

- Simple model switching

- Mac, Windows, Linux

- Open-source code

- Community support
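Switching models mostly comes down to referring to a different model name. Here is a rough sketch of that workflow. It assumes an Ollama server is running at its default local address, `http://localhost:11434`, and uses the server's `/api/tags` endpoint, which lists the models already downloaded. The helpers `parse_model_names` and `pick_model` are hypothetical names chosen for illustration and are not part of Ollama.

```python
from __future__ import annotations

import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_json: str) -> list[str]:
    """Extract model names from an /api/tags response body."""
    data = json.loads(tags_json)
    return [m["name"] for m in data.get("models", [])]


def pick_model(names: list[str], family: str) -> str | None:
    """Hypothetical helper: first installed model whose name starts with `family`."""
    for name in names:
        if name.startswith(family):
            return name
    return None


def list_local_models() -> list[str]:
    """Ask the running Ollama server which models are installed locally."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=10) as resp:
        return parse_model_names(resp.read().decode("utf-8"))
```

Calling `pick_model(list_local_models(), "mistral")` would return the first installed Mistral tag, or `None` if none has been downloaded. That name can then be passed to any request that takes a `model` field.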


Want to harness the power of AI language models without sending your data to the cloud or paying a dime? Meet Ollama, a free, open-source gem that lets you run heavy-hitters like Llama 3.3, Mistral, and Gemma 2 right on your Mac. It's lightweight and straightforward, and it's built for local use: install it, pick a model, and start chatting or experimenting, all while your data stays on your machine. Whether you're a developer tinkering with its local API, a researcher testing prompts, or simply AI-curious, Ollama hands you the keys to models like Phi-4 and DeepSeek-R1 with a single terminal command. It runs on Mac, Windows, and Linux and is backed by an active GitHub community. That makes it the no-fuss way to bring cutting-edge AI to your desktop: fast, free, and fully yours.
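For developers, the same local server accepts chat requests over HTTP. The following is a minimal Python sketch that uses only the standard library. It assumes the server runs on the default `http://localhost:11434` and that the chosen model has already been downloaded (for example with `ollama pull llama3.3`). The sketch posts to the `/api/chat` endpoint with streaming turned off, so the reply arrives as a single JSON object. The function names `build_chat_request` and `chat` are illustrative, not part of Ollama.

```python
from __future__ import annotations

import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send a single prompt to the local server and return the reply text."""
    req = build_chat_request(model, prompt)
    # Generous timeout: large models can take a while on the first request.
    with urllib.request.urlopen(req, timeout=300) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["message"]["content"]
```

For example, `chat("llama3.3", "Summarize what a context window is in one sentence.")` would return the model's reply as a string. Because the request goes to `localhost`, the prompt never leaves the machine. If `stream` is left at its default of `true`, the server instead returns a sequence of newline-delimited JSON chunks. That suits interactive interfaces but requires line-by-line parsing.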
